HMLP: High-performance Machine Learning Primitives

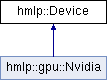

This class describes devices or accelerators that must be controlled by a master thread. A device can accept tasks from multiple workers. All received tasks are expected to be executed independently in a time-sharing fashion; whether they run in parallel, sequentially, or under some built-in context-switching scheme does not matter.
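The execution model above (one master thread, many submitting workers, time-shared execution) can be illustrated with a minimal sketch. It assumes the simplest permitted scheme: the master thread drains a shared queue and runs tasks sequentially. `ToyDevice`, `Submit`, and `CountTasks` are hypothetical names for illustration only; none of them appear among `Device`'s public members, and this is not how HMLP implements the class.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical toy device: a single master thread owns the "device" and
// executes tasks one at a time; any number of workers may submit tasks.
class ToyDevice {
 public:
  ToyDevice() : master_([this] { Run(); }) {}

  ~ToyDevice() {
    {
      std::lock_guard<std::mutex> lock(mu_);
      stop_ = true;
    }
    cv_.notify_one();
    master_.join();  // Run() drains every queued task before returning
  }

  // Called from worker threads; enqueues the task and returns immediately.
  void Submit(std::function<void()> task) {
    {
      std::lock_guard<std::mutex> lock(mu_);
      tasks_.push(std::move(task));
    }
    cv_.notify_one();
  }

 private:
  void Run() {
    for (;;) {
      std::function<void()> task;
      {
        std::unique_lock<std::mutex> lock(mu_);
        cv_.wait(lock, [this] { return stop_ || !tasks_.empty(); });
        if (tasks_.empty()) return;  // stop requested and nothing left to run
        task = std::move(tasks_.front());
        tasks_.pop();
      }
      task();  // only the master thread ever executes tasks
    }
  }

  std::mutex mu_;
  std::condition_variable cv_;
  std::queue<std::function<void()>> tasks_;
  bool stop_ = false;
  std::thread master_;  // declared last: starts after the members above exist
};

// Demo (hypothetical): `workers` threads each submit `per_worker` increments.
int CountTasks(int workers, int per_worker) {
  int counter = 0;  // written only by the master thread, so no lock is needed
  {
    ToyDevice dev;
    std::vector<std::thread> pool;
    for (int w = 0; w < workers; ++w) {
      pool.emplace_back([&dev, &counter, per_worker] {
        for (int i = 0; i < per_worker; ++i) dev.Submit([&counter] { ++counter; });
      });
    }
    for (auto &t : pool) t.join();
  }  // ~ToyDevice drains the queue, then joins the master thread
  return counter;
}
```

Running tasks strictly in order is only one valid choice; a real accelerator could overlap them on several streams, which the class description explicitly leaves open.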

#include <device.hpp>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | `Device ()` | Device implementation. |
| `virtual class CacheLine *` | `getline (size_t size)` | |
| `virtual void` | `prefetchd2h (void *ptr_h, void *ptr_d, size_t size, int stream_id)` | |
| `virtual void` | `prefetchh2d (void *ptr_d, void *ptr_h, size_t size, int stream_id)` | |
| `virtual void` | `waitexecute ()` | |
| `virtual void` | `wait (int stream_id)` | |
| `virtual void *` | `malloc (size_t size)` | |
| `virtual void` | `malloc (void *ptr_d, size_t size)` | |
| `virtual size_t` | `get_memory_left ()` | |
| `virtual void` | `free (void *ptr_d, size_t size)` | |
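To show how the memory-related virtual members might be overridden, here is a minimal sketch of a host-backed "device" with a fixed memory budget. It rests on several assumptions not stated in the reference: `DeviceBase` is a hypothetical stand-in that declares only some of the members listed above (the real `device.hpp` is not included, and the `malloc (void *ptr_d, size_t size)` overload is omitted because its semantics are not documented here); the prefetch functions are read as "copy `size` bytes into the first pointer", matching their parameter order; and `free` is assumed to return `size` bytes to the budget.

```cpp
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <new>

// Hypothetical stand-in mirroring a subset of Device's virtual members.
class DeviceBase {
 public:
  virtual ~DeviceBase() = default;
  virtual void prefetchd2h(void *ptr_h, void *ptr_d, std::size_t size, int stream_id) = 0;
  virtual void prefetchh2d(void *ptr_d, void *ptr_h, std::size_t size, int stream_id) = 0;
  virtual void wait(int stream_id) = 0;
  virtual void *malloc(std::size_t size) = 0;
  virtual std::size_t get_memory_left() = 0;
  virtual void free(void *ptr_d, std::size_t size) = 0;
};

// Host and "device" share one address space, so every transfer is a
// synchronous memcpy and stream_id is accepted but ignored.
class HostDevice : public DeviceBase {
 public:
  explicit HostDevice(std::size_t capacity) : memory_left_(capacity) {}

  void prefetchd2h(void *ptr_h, void *ptr_d, std::size_t size, int) override {
    std::memcpy(ptr_h, ptr_d, size);  // device -> host
  }
  void prefetchh2d(void *ptr_d, void *ptr_h, std::size_t size, int) override {
    std::memcpy(ptr_d, ptr_h, size);  // host -> device
  }
  void wait(int) override {}  // copies are synchronous, so nothing is pending

  void *malloc(std::size_t size) override {
    if (size > memory_left_) throw std::bad_alloc();  // budget exhausted
    void *p = std::malloc(size ? size : 1);           // avoid malloc(0) == nullptr
    if (!p) throw std::bad_alloc();
    memory_left_ -= size;
    return p;
  }
  std::size_t get_memory_left() override { return memory_left_; }
  void free(void *ptr_d, std::size_t size) override {
    std::free(ptr_d);
    memory_left_ += size;  // caller passes the size it originally requested
  }

 private:
  std::size_t memory_left_;
};
```

Passing `size` to `free` lets a device track its remaining capacity without keeping its own allocation table; on a real accelerator, `wait(stream_id)` would block until the asynchronous copies issued on that stream complete.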

Public Attributes

| Type | Attribute |
| --- | --- |
| `DeviceType` | `devicetype` |
| `std::string` | `name` |
| `class Cache` | `cache` |
