|

HMLP: High-performance Machine Learning Primitives

|

|

HMLP: High-performance Machine Learning Primitives

|

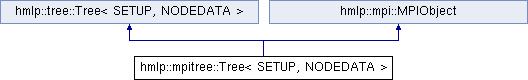

This distributed tree inherits the shared memory tree with some additional MPI data structure and function call. More...

#include <tree_mpi.hpp>

Public Types | |

| typedef SETUP::T | T |

| typedef tree::Node< SETUP, NODEDATA > | NODE |

| typedef Node< SETUP, NODEDATA > | MPINODE |

Public Types inherited from hmlp::tree::Tree< SETUP, NODEDATA > Public Types inherited from hmlp::tree::Tree< SETUP, NODEDATA > | |

| typedef SETUP::T | T |

| typedef Node< SETUP, NODEDATA > | NODE |

Public Member Functions | |

| Tree (mpi::Comm comm) | |

| ~Tree () | |

| void | CleanUp () |

| free all tree nodes including local tree nodes, distributed tree nodes and let nodes More... | |

| void | AllocateNodes (vector< size_t > &gids) |

| vector< size_t > | GetPermutation () |

| template<typename KNNTASK > | |

| DistData< STAR, CBLK, pair< T, size_t > > | AllNearestNeighbor (size_t n_tree, size_t n, size_t k, pair< T, size_t > initNN, KNNTASK &dummy) |

| void | TreePartition () |

| partition n points using a distributed binary tree. More... | |

| void | RecursiveMorton (MPINODE *node, MortonHelper::Recursor r) |

| Data< int > | CheckAllInteractions () |

| int | Morton2Rank (size_t it) |

| int | Index2Rank (size_t gid) |

| template<typename TASK , typename... Args> | |

| void | LocaTraverseUp (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | DistTraverseUp (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | LocaTraverseDown (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | DistTraverseDown (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | LocaTraverseLeafs (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | LocaTraverseUnOrdered (TASK &dummy, Args &...args) |

| For unordered traversal, we just call local downward traversal. More... | |

| template<typename TASK , typename... Args> | |

| void | DistTraverseUnOrdered (TASK &dummy, Args &...args) |

| For unordered traversal, we just call distributed downward traversal. More... | |

| void | DependencyCleanUp () |

| void | ExecuteAllTasks () |

| void | DependOnNearInteractions (int p, Task *task) |

| void | DependOnFarInteractions (int p, Task *task) |

Public Member Functions inherited from hmlp::tree::Tree< SETUP, NODEDATA > Public Member Functions inherited from hmlp::tree::Tree< SETUP, NODEDATA > | |

| Tree () | |

| ~Tree () | |

| void | Offset (NODE *node, size_t offset) |

| void | RecursiveMorton (NODE *node, MortonHelper::Recursor r) |

| void | AllocateNodes (NODE *root) |

| Allocate the local tree using the local root with n points and depth l. More... | |

| void | TreePartition () |

| Shared-memory tree partition. More... | |

| vector< size_t > | GetPermutation () |

| template<typename KNNTASK > | |

| Data< pair< T, size_t > > | AllNearestNeighbor (size_t n_tree, size_t k, size_t max_depth, pair< T, size_t > initNN, KNNTASK &dummy) |

| Data< int > | CheckAllInteractions () |

| template<typename TASK , typename... Args> | |

| void | TraverseLeafs (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | TraverseUp (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | TraverseDown (TASK &dummy, Args &...args) |

| template<typename TASK , typename... Args> | |

| void | TraverseUnOrdered (TASK &dummy, Args &...args) |

| For unordered traversal, we just call local downward traversal. More... | |

| void | DependencyCleanUp () |

| void | ExecuteAllTasks () |

| bool | DoOutOfOrder () |

| template<typename SUMMARY > | |

| void | Summary (SUMMARY &summary) |

| Summarize all events in each level. | |

Public Member Functions inherited from hmlp::mpi::MPIObject Public Member Functions inherited from hmlp::mpi::MPIObject | |

| MPIObject (mpi::Comm comm) | |

| void | AssignCommunicator (mpi::Comm &comm) |

| mpi::Comm | GetComm () |

| mpi::Comm | GetPrivateComm () |

| int | GetCommSize () |

| int | GetCommRank () |

| int | Comm_size () |

| int | Comm_rank () |

| int | Barrier () |

| int | PrivateBarrier () |

Public Attributes | |

| vector< MPINODE * > | mpitreelists |

| vector< vector< size_t > > | NearSentToRank |

| vector< map< size_t, int > > | NearRecvFromRank |

| vector< vector< size_t > > | FarSentToRank |

| vector< map< size_t, int > > | FarRecvFromRank |

| vector< ReadWrite > | NearRecvFrom |

| vector< ReadWrite > | FarRecvFrom |

Public Attributes inherited from hmlp::tree::Tree< SETUP, NODEDATA > Public Attributes inherited from hmlp::tree::Tree< SETUP, NODEDATA > | |

| SETUP | setup |

| size_t | n = 0 |

| size_t | m = 0 |

| size_t | depth = 0 |

| Lock | lock |

| vector< NODE * > | treelist |

| unordered_map< size_t, NODE * > | morton2node |

Additional Inherited Members | |

Static Public Attributes inherited from hmlp::tree::Tree< SETUP, NODEDATA > Static Public Attributes inherited from hmlp::tree::Tree< SETUP, NODEDATA > | |

| static const int | N_CHILDREN = 2 |

Protected Attributes inherited from hmlp::tree::Tree< SETUP, NODEDATA > Protected Attributes inherited from hmlp::tree::Tree< SETUP, NODEDATA > | |

| vector< size_t > | global_indices |

This distributed tree inherits the shared memory tree with some additional MPI data structure and function call.

end class Node

| typedef Node<SETUP, NODEDATA> hmlp::mpitree::Tree< SETUP, NODEDATA >::MPINODE |

Define distributed tree node type as MPINODE.

| typedef tree::Node<SETUP, NODEDATA> hmlp::mpitree::Tree< SETUP, NODEDATA >::NODE |

Inherit parameters n, m, and depth; local treelists and morton2node map.

Explanation for the morton2node map in the distributed tree:

morton2node has type map<size_t, tree::Node>, but it actually contains "three" different kinds of tree nodes.

|

inline |

|

inline |

(Default) Tree destructor.

we do not free the last tree node, it will be deleted by hmlp::tree::Tree()::~Tree()

|

inline |

end GetTreePermutation() Perform approximate kappa neighbor search.

Get the problem size from setup->K->row().

k-by-N, column major.

Use leaf size = 4 * k.

Local problem size (assuming Round-Robin)

Edge case

Local problem size (assuming Round-Robin)

Edge case

Initial gids distribution (asssuming Round-Robin)

Allocate distributed tree nodes in advance.

Metric tree partitioning.

Query neighbors computed in CIDS distribution.

Pass in neighbor pointer.

Queries computed in CBLK distribution

Redistribute from CIDS to CBLK

Merge Q_cblk into NN (sort and remove duplication)

Metric tree partitioning.

Query neighbors computed in CIDS distribution.

Pass in neighbor pointer.

Overlap

Redistribute from CIDS to CBLK

Queries computed in CBLK distribution

Redistribute from CIDS to CBLK

Merge Q_cblk into NN (sort and remove duplication)

Check for illegle values.

|

inline |

end CleanUp() Allocate all distributed tree nodse.

Decide the depth of the distributed tree according to mpi size.

Allocate root( setup, n = 0, l = 0, parent = NULL ).

Push root to the mpi treelist.

Recursively spliiting the communicator.

Increase level.

Left color = 0, right color = 1.

Split and assign the subcommunicators for children.

Update mycomm, mysize, and myrank to proceed to the next iteration.

Create the child node.

Create the sibling in type NODE but not MPINODE.

Setup parent's children

Push to the mpi treelist

Global synchronization.

Allocate local tree nodes.

|

inline |

Get the total depth of the tree.

Number of total leaf nodes.

Create a 2^l-by-2^l table to check all interactions.

Now traverse all tree nodes (excluding the root).

Loop over all near interactions.

Loop over all far interactions.

Loop over all near interactions.

Loop over all far interactions.

Reduce

|

inline |

free all tree nodes including local tree nodes, distributed tree nodes and let nodes

Free all local tree nodes

Free all distributed tree nodes

|

inline |

TODO also clean up the LET node

|

inline |

end DependOnNearInteractions()

Describe the dependencies of rank p.

Try to enqueue if there is no dependency.

|

inline |

Describe the dependencies of rank p.

Try to enqueue if there is no dependency.

|

inline |

move to its child IMPORTANT: here we need to cast the pointer back to mpitree::Node*

|

inline |

For unordered traversal, we just call distributed downward traversal.

|

inline |

end LocaTraverseUp()

move to its parent

|

inline |

|

inline |

end AllocateNodes()

Sanity check using an 0:N-1 table.

|

inline |

end Morton2Rank()

|

inline |

end DistTraverseUp()

contain at lesat one tree node

traverse the local tree without the root

IMPORTANT: local root alias of the distributed leaf node IMPORTANT: here l must be int, size_t will wrap over

|

inline |

contain at lesat one tree node

|

inline |

For unordered traversal, we just call local downward traversal.

end LocaTraverseLeaf()

|

inline |

end Morton2Rank()

contain at lesat one tree node

traverse the local tree without the root

IMPORTANT: local root alias of the distributed leaf node IMPORTANT: here l must be int, size_t will wrap over

loop over each node at level-l

|

inline |

|

inline |

end TreePartition() Assign MortonID to each node recursively.

MPI Support.

Set the node MortonID.

Set my sibling's MortonID.

Compute MortonID recursively for the local tree.

Prepare to exchange all <gid,MortonID> pairs.

Gather pairs I own.

Exchange send_pairs.size().

Compute displacement for Allgatherv.

Sanity check for the total size.

Exchange all pairs.

Fill in all MortonIDs.

|

inline |

partition n points using a distributed binary tree.

Set up total problem size n and leaf node size m.

Initial gids distribution (asssuming Round-Robin).

Local problem size (assuming Round-Robin).

Allocate distributed tree nodes in advance.

Allocate space for point MortonID.

Compute Morton ID for both distributed and local trees.

Clean up the map.

Construct morton2node map for the local tree.

Construc morton2node map for the distributed tree.

| vector<MPINODE*> hmlp::mpitree::Tree< SETUP, NODEDATA >::mpitreelists |

Distribued tree nodes in the top-down order. Notice thay mpitreelist.back() is the root of the local tree.

i.e. mpitrelist.back() == treelist.front();

| vector<vector<size_t> > hmlp::mpitree::Tree< SETUP, NODEDATA >::NearSentToRank |

end DependOnFarInteractions() Interaction lists per rank

NearSentToRank[ p ] contains all near node MortonIDs sent to rank p. NearRecvFromRank[ p ] contains all near node MortonIDs recv from rank p. NearRecvFromRank[ p ][ morton ] = offset in the received vector.